Experts examine data fusion as a powerful predictive decision-making tool for mapping a crowded space environment, and the need to tie it to specific requirements rather than treat it as a cure-all.

ATLANTA — As space grows more crowded and more contested, finding the best way to track objects in orbit becomes increasingly challenging. Relying on multiple sensors and “fusing” this data to achieve more precise real-time positioning is a key capability, especially for U.S. Space Force and Department of Defense (DoD) decision-makers.

Crews of the National Space Defense Center provide threat-focused space domain awareness across the national security space enterprise. (Source: U.S. Space Force/Kathryn Damon)

“We are competing against thinking, adaptive adversaries all the time. The challenge of delivering the most relevant information to the decision-makers the fastest is greater than it’s ever been, despite the technology we have at our disposal,” says Col. Garry Floyd, a career intelligence officer who currently serves as Department of the Air Force Director of the DAF-MIT Artificial Intelligence Accelerator (AIA) in Cambridge, Mass. “At the end of the day, the quest is one for certainty—being able to make decisions where there is certainty.”

Data fusion, or combining streams of information from multiple sensors and associated databases to get a clearer situational picture of the space domain, is emerging as a powerful tool for more accurate, actionable decision-making.

“Individual data points don’t tell the entire story,” explains Michael Clonts, director of Kratos’ strategic initiatives for RF signal-based space domain awareness. “If you think of the space environment like a jigsaw puzzle, each SDA sensor shows you one piece of that puzzle. You may need to fuse dozens or hundreds of pieces together before the picture really becomes apparent.”

Avoiding Pitfalls of Over-hype

Before fusing data from multiple sources, it’s best to first look for specific and repeatable use cases or systems. Otherwise, data fusion could be overhyped and fail to meet market expectations, says Darren McKnight, senior technical fellow for LeoLabs, a leading commercial provider of low Earth orbit (LEO) mapping and space situational awareness (SSA) services.

He points to the Gartner Technology Hype Cycle, which repeatedly predicted the early stumbles of technologies from cryptocurrency and blockchain to artificial intelligence (AI) when they were applied too broadly to the wrong types of problems. Data fusion may experience a similar fate if users don’t apply it as an enabler to meet requirements, he predicts.

Operators must put in the resources, including people, to engineer the standards, data flow and approvals needed for this tool to achieve its full potential. However, despite the risks of not taking the time to be strategic about data fusion, most experts feel that the technology is here to stay, as it is already delivering value to the DoD and commercial companies, especially for Space Situational Awareness (SSA).

One challenge likely to emerge with data fusion advances is the push and pull of physical capabilities in the space environment versus the data and insights available on the ground.

“If the combined sensors tell me what's going to happen over the next 10 minutes, but it takes 20 minutes for the spacecraft to react, we will need analytics and insights to be more predictive or more capable spacecraft,” says Brad Grady, research director for NSR, an Analysys Mason Company.

“Data fusion is a big buzzword. And it's not just about space situational awareness; we're seeing data fusion requirements as a driver also on the connectivity layer for battlefield situational awareness. On the military side, we’re seeing a lot of interest in being able to ingest commercial sensors, commercial systems and commercial information into this bigger situational awareness picture,” he adds.

Examining Data Fusion Types, Uses

There are three types of data fusion: sensor, feature and decision-making. They are performed in two ways, algorithmically and visually.

“Algorithms guide the first two types of data fusion—sensor and feature fusion—by feeding sensor data into an equation or algorithm to get a more accurate answer, while decision-making fusion relies on visualization,” McKnight explains.

Sensor fusion might determine the current state or a characteristic of an object in orbit by combining data from different sensors, for example to pin down its current location.
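As a rough illustration of sensor fusion, the sketch below combines two independent estimates of the same quantity using an inverse-variance weighted average, a standard textbook technique. It is not a description of any vendor's system: the `Measurement` class, the `fuse` function and the sample radar and optical values are all hypothetical, and the sensors are assumed to be independent with known 1-sigma uncertainties.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """One sensor's estimate of a scalar quantity and its 1-sigma uncertainty."""
    value: float
    sigma: float


def fuse(measurements):
    """Inverse-variance weighted mean of independent measurements.

    More precise sensors (smaller sigma) get proportionally more weight,
    and the fused sigma is smaller than any single input sigma.
    """
    weights = [1.0 / (m.sigma ** 2) for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    sigma = (1.0 / total) ** 0.5
    return Measurement(value, sigma)


# Hypothetical inputs: range to the same object, in kilometers.
radar = Measurement(value=7000.0, sigma=0.5)
optical = Measurement(value=7001.0, sigma=1.0)

fused = fuse([radar, optical])
print(f"fused range: {fused.value:.2f} km, sigma: {fused.sigma:.2f} km")
```

With these sample numbers the fused estimate sits closer to the more precise radar value, and its uncertainty is lower than that of either sensor alone, which is the basic payoff of fusing at the sensor level.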

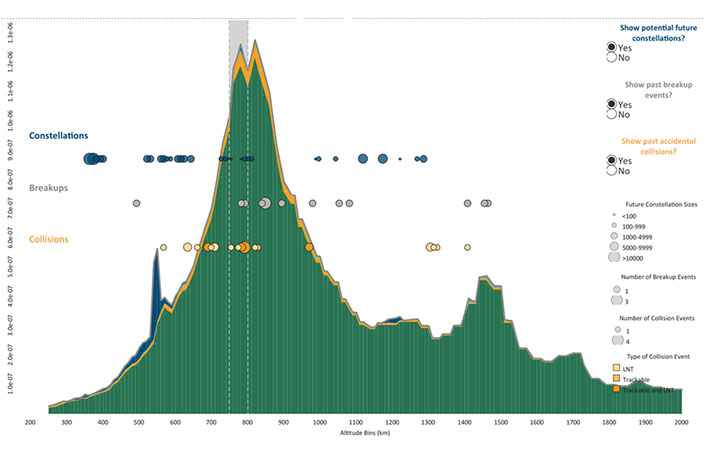

An example of a decision fusion dashboard is the LeoRisk display, an analytic representation of space debris in LEO overlaid with indicators of likely future constellation deployments, a depiction of past significant breakup events and possible mission-terminating collisions with nontrackable debris. (Source: LeoLabs)

In contrast, feature fusion predicts the future probability of an event, such as the likelihood of a collision or how fast an object is tumbling. To illustrate, feature fusion might look at a conjunction data message tracking two objects in orbit to estimate the probability of them colliding. This type of scenario requires gathering information about the two objects’ motion, shape and orientation, and rolling that data into another equation or algorithm.
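To make the collision example concrete, here is a minimal sketch of one common simplification: treat the predicted miss vector in the encounter plane as a two-dimensional Gaussian and integrate it over a disk whose radius is the combined size of the two objects. Everything here is an assumption for illustration, including the function name `collision_probability`, the uncorrelated position errors, the single hard-body radius standing in for shape and orientation, and the sample values. Operational tools are considerably more detailed.

```python
import math


def collision_probability(miss_x, miss_y, sigma_x, sigma_y,
                          hard_body_radius, n=200):
    """Approximate probability that the relative position falls inside
    a disk of radius hard_body_radius centered on the origin.

    The relative position is modeled as an uncorrelated 2D Gaussian with
    mean (miss_x, miss_y) and standard deviations (sigma_x, sigma_y).
    The integral is evaluated with a midpoint rule on an n x n grid.
    """
    r = hard_body_radius
    step = 2.0 * r / n
    norm = 1.0 / (2.0 * math.pi * sigma_x * sigma_y)
    total = 0.0
    for i in range(n):
        x = -r + (i + 0.5) * step
        for j in range(n):
            y = -r + (j + 0.5) * step
            if x * x + y * y > r * r:
                continue  # grid cell center lies outside the disk
            dx = (x - miss_x) / sigma_x
            dy = (y - miss_y) / sigma_y
            total += norm * math.exp(-0.5 * (dx * dx + dy * dy)) * step * step
    return total


# Hypothetical conjunction, all lengths in meters.
pc = collision_probability(miss_x=200.0, miss_y=0.0,
                           sigma_x=100.0, sigma_y=50.0,
                           hard_body_radius=10.0)
print(f"estimated collision probability: {pc:.2e}")
```

The inputs mirror the ingredients named above: the miss vector and its uncertainty come from the two objects' tracked motion, while the hard-body radius is a crude proxy for their shape and orientation.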

Decision-making fusion, a graphical overlay of different sources together, is comparable to how one might do a SWOT (strengths, weaknesses, opportunities and threats) analysis. One example of decision-making fusion at LeoLabs is creating a spatial density curve in LEO, or a calculation of all objects in low Earth orbit and their movement over time.

Overlaid on this curve are other data: locations of future constellations, breakup events and hypothetical collisions, which collectively help give operators an idea of where future problems are likely to occur. That type of insight is critical for LeoLabs, which, in September, was tapped by the U.S. Commerce Department to help it develop a new space traffic management system to safeguard civil, commercial and non-U.S. satellites, a role previously managed by the DoD.
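A spatial density curve of the kind described above can be sketched in a few lines: count objects in altitude shells and divide by each shell's volume. The version below is a simplified snapshot, not LeoLabs' calculation. It assumes each object can be represented by a single altitude (roughly, a circular orbit), and the function name `spatial_density`, the 50 km shell width and the sample catalog are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6378.0  # approximate equatorial radius


def spatial_density(altitudes_km, bin_km=50.0, min_alt=200.0, max_alt=2000.0):
    """Return a list of (shell_lower_altitude_km, objects_per_km3).

    Objects outside [min_alt, max_alt) are ignored. Each shell's volume is
    the difference between two concentric spheres.
    """
    n_bins = int((max_alt - min_alt) / bin_km)
    counts = [0] * n_bins
    for alt in altitudes_km:
        if min_alt <= alt < max_alt:
            counts[int((alt - min_alt) // bin_km)] += 1

    curve = []
    for i, count in enumerate(counts):
        lo = EARTH_RADIUS_KM + min_alt + i * bin_km
        hi = lo + bin_km
        volume = 4.0 / 3.0 * math.pi * (hi ** 3 - lo ** 3)
        curve.append((min_alt + i * bin_km, count / volume))
    return curve


# Hypothetical catalog of object altitudes, in kilometers.
catalog = [550.0, 550.0, 560.0, 780.0, 1200.0]
curve = spatial_density(catalog)
peak = max(curve, key=lambda point: point[1])
print(f"densest shell starts at {peak[0]:.0f} km")
```

Once the curve exists, overlays such as planned constellation altitudes or past breakup events are simply additional series plotted against the same altitude axis.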

“LeoLabs uses data fusion as part of our capability and doing that quickly requires you to have standard formats and to understand the uncertainty of the data going in, so that you know when you add them together, you know what you get coming out,” McKnight says.

Floyd understands well how important it is to have an information advantage for making decisions in the field: he most recently led ISR operations as commander of the 694th Intelligence, Surveillance and Reconnaissance Group at Osan Air Base, Republic of Korea.

He observes that guardians actively monitor channels, but are limited by their force size compared with the vast amounts of data being tracked. “Inevitably, something goes to the server to be looked at later but later is too late sometimes,” he says.

The use case or application often dictates whether fusing sensor data makes sense. For instance, an operator considering sensor data fusion to better identify the location of an object in space may think to fuse optical and radar data. However, optics have limitations since weather or the sun’s angle could obscure an object’s visibility, whereas radar offers more consistency since it can see an object day or night as it passes within the field of view.

But what if a user wants to determine the dynamic state of a satellite, such as whether it is operational or tumbling? In that case, data fusion with radar, optical and RF sources makes sense since optical can track an object’s changing position or rotation over time based on the observation track.

Combining Commercial Data

Data fusion remains a core capability as the DoD invests in future satellite networks. The Pentagon’s POET, or Prototype On-orbit Experimental Test Bed, launched last June and will remain in low Earth orbit for five years. As part of the Space Development Agency’s plans to develop a satellite network that can gather data from multiple sensors on the ground, POET will integrate a third-party multiple-intelligence (multi-INT) data fusion software application into modular, upgradable mission software running on an edge processor aboard a LEO satellite.

Director of the Air Force Research Laboratory Space Vehicles Directorate Col. Jeremy Raley is working with industry on data fusion solutions and user-friendly systems. (Source: AFRL)

Col. Jeremy Raley, commander of Phillips Research Site and director of the Air Force Research Laboratory Space Vehicles Directorate at Kirtland Air Force Base, says his team is in ongoing discussions with commercial and industry players on how best to leverage innovative data fusion approaches.

“How do we enhance the cognitive capabilities of human beings who have to deal with the data? That’s what I want out of data fusion and that’s what will allow us to accomplish the mission we’ve been given with the number of guardians that we’re authorized to have in the Space Force. Otherwise, with proliferating constellations and new orbital regimes like cislunar entering the mix, it will be overwhelming for us to track in the same sort of ways we’ve done in the past.”

Previously, the Air Force was much more centered on tracking known objects and performing a manual search if an object wasn’t where the operator expected it to be. Data fusion approaches allow operators to keep track of objects based on identifying characteristics and/or more frequent observations around maneuvers.

In the near term, Col. Raley, in his role overseeing the AFRL’s Space Vehicles Directorate, is working to ensure that data fusion systems are user-friendly from a network perspective, so guardians with operational command and control oversight can use the systems without being network management experts.

“To that end, I’m really doubling down on working with AFRL’s Information Directorate in Rome, New York, and our partners at Space Systems Command to go after those pieces,” he says.
